SoloScaleAI - When Do I Get My One Click Enterprise?

Why You Should Keep Reading

Control Image Composition In Midjourney

Are There Any Moats For AI Businesses?

Large Language Model Bootcamp (FREE)

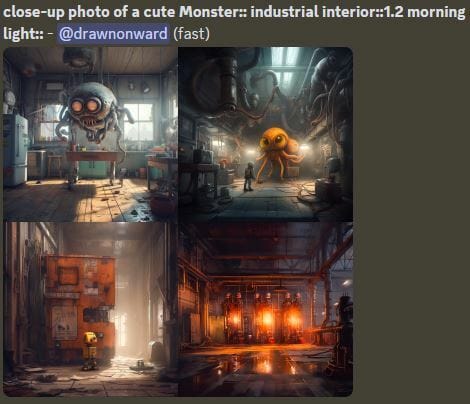

How To Control Image Composition In Midjourney Using Sliders And Cowbells

With AI image “generators” like Midjourney you never quite know what you’re going to get. Sometimes that’s fun, and other times it’s valuable, since it turns up crannies of creativity you might never have visited. But when you have an idea of what you want, you’re going to want to exert some editorial control.

You can manipulate the prompt language itself, but the results are very unpredictable, especially when you want to control how two or more things relate to each other. In Midjourney 5 there are some controls you can learn. Here we’ll get a sense of what sliders and cowbells do.

Normally a prompt is just a gnarly sentence. Midjourney recognizes “cowbells” (as in “give me more…”) when you simply repeat a word to emphasize it. In this sequence we will turn “cute monster” into “cute cute cute monster” to get something with a bigger head relative to its body and/or limbs, generally bigger eyes, etc.

We also turn the normal prompt into separate sliders using the double colon “::”. The default weight of a slider is 1, so if you leave it blank that’s what you get. Still, breaking the prompt into sliders already alters the relative weight of different ideas, since it forces Midjourney to consider each part as a separate concept instead of reading the whole sentence at once.

Breaking the normal prompt into sliders already changes the probability that Midjourney will show more of the industrial interior.

Using cowbell on “cute” gets us a more chibi-looking monster. However, in the original prompt Midjourney is still often deemphasizing the industrial interior.

If we alter the monster slider to 2 (default if empty is 1) we get a lot more monster and a lot less interior. We might not even get any recognizable interior at all.

Sliders are unpredictable, just like everything in Midjourney. Setting monster back to 1 and setting interior to 2, we completely eliminate the monster. I dunno, maybe it’s in there somewhere?

But they’re called “sliders” for a reason. We just change the weighting value a bit until we get something that is likely to have the composition we’re after. In this case, more of an emphasis on the setting.

And if we combine the cowbell and slider we can get a (relatively?) cute monster and a lot of the interior visible at the same time.
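To make the walkthrough above concrete, here is a minimal Python sketch that assembles the prompt variants as text you could paste into Midjourney. The helper names (`cowbell`, `slider_prompt`) are made up for illustration, the exact prompt wording is an assumption (the original prompts lived in the images), and the 1.5 weight is just a guess at "change the weighting value a bit". The only real Midjourney syntax used is the `::` separator followed by a weight.

```python
def cowbell(word, times):
    """Repeat a word to emphasize it, e.g. 'cute cute cute'."""
    return " ".join([word] * times)


def slider_prompt(parts):
    """Join (text, weight) pairs into a '::'-weighted prompt string.

    Weights are always written out here; leaving one blank in
    Midjourney means the same thing as writing 1.
    """
    return " ".join(f"{text}::{weight:g}" for text, weight in parts)


# Hypothetical wording; only "cute monster" and "industrial interior"
# come from the article itself.
plain = slider_prompt([("cute monster", 1), ("industrial interior", 1)])
more_monster = slider_prompt([("cute monster", 2), ("industrial interior", 1)])
combined = slider_prompt([
    (cowbell("cute", 3) + " monster", 1),
    ("industrial interior", 1.5),  # assumed value, tune to taste
])

print(plain)         # cute monster::1 industrial interior::1
print(more_monster)  # cute monster::2 industrial interior::1
print(combined)      # cute cute cute monster::1 industrial interior::1.5
```

Building prompts this way makes it easy to nudge one weight at a time and keep track of which combination produced which image.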

Where Are The Moats for AI Businesses?

Jay Alammar starts with a16z’s map of the generative AI tech stack, but decides it isn’t detailed enough, so he fleshes it out with several extra areas. You should read the whole thing to get his reasoning and links to additional reading. Here are some takeaways:

If your business is in the application layer, consider going a layer deeper by building your own custom fine-tuned version of a foundation model. This can be as easy as uploading a single file if you’re using the right managed language model provider, so you can experiment with many different fine-tuned versions. For example, Lensa.ai makes one for each paying user.

If your business uses AI, you have to collect feedback. Data, particularly closed-loop data, is what makes these models work. Get that feedback somehow. It can be as simple as Grammarly’s little buttons like “incorrect suggestion”.

Consider making your model’s generations public so that users can learn from each other what the app/model is capable of and where to go next. For example, Midjourney makes generated images public by default, which inspires and educates many new users.

Jay wraps up with these pockets where businesses can find a competitive advantage: Distribution, Proprietary Data, Domain Expertise, Network Effects, Collections of Prompts, Private Fine-Tuned Models, Data Acquisition and Labeling.
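For the feedback takeaway above, a rough sketch of what "get that feedback somehow" could look like in code: every button click becomes one line in a JSONL file that pairs the prompt, the model's output, and the user's label. The `FeedbackEvent` fields, function names, and file format are all assumptions for illustration, not anything Jay or Grammarly describes.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class FeedbackEvent:
    """One click on a feedback button (hypothetical schema)."""
    prompt: str       # what the user asked for
    generation: str   # what the model produced
    label: str        # e.g. "incorrect suggestion"


def record_feedback(event, path):
    """Append one feedback event as a single JSON line."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(asdict(event)) + "\n")


def load_feedback(path):
    """Read all recorded events back, skipping blank lines."""
    with open(path, encoding="utf-8") as f:
        return [FeedbackEvent(**json.loads(line)) for line in f if line.strip()]
```

A file like this is the raw material for the closed loop: the prompt and generation say what happened, and the label says whether it was any good.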

Free LLM Bootcamp from Full Stack Deep Learning

This FREE “course” is packed with useful information about LLMs, what they really are, how to use them, and how to break them.

Here are some quick tips:

In general, to get better results out of an LLM, you want to eliminate ambiguity. A big part of prompt engineering is just realizing that the LLM didn’t understand how to differentiate what you want from all the other possibilities. Breaking down problems into smaller pieces is a great start. So is rephrasing it, or providing an example of correct output.

You can prompt most LLMs to turn structured pseudocode into code. It works especially well if you surround the pseudocode with triple backticks, because the models are trained on a lot of data from GitHub, which deeply embeds that convention.

You can improve LLM results with Chain of Thought, where you prompt the LLM to “think through” each step working up to the final answer. This can be as simple as adding “Q: whatever A: Let’s think step by step” to the prompt, so that the LLM is encouraged to continue an answer that starts with “Let’s think step by step…”.
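The last two tips are really just string formatting, so here is a small Python sketch of both. The function names and the instruction wording are assumptions for illustration; no particular LLM API is called, the functions only build the prompt text you would send.

```python
FENCE = "`" * 3  # triple backticks, built this way to keep this snippet readable


def pseudocode_prompt(pseudocode, language="Python"):
    """Wrap pseudocode in triple backticks and ask for real code."""
    return (
        f"Turn this pseudocode into working {language} code.\n"
        f"{FENCE}\n{pseudocode.strip()}\n{FENCE}"
    )


def chain_of_thought_prompt(question):
    """Format a question so the answer starts with step-by-step reasoning."""
    return f"Q: {question}\nA: Let's think step by step."


print(chain_of_thought_prompt("What is 17 * 3?"))
# Q: What is 17 * 3?
# A: Let's think step by step.
```

Either string goes to the model as the prompt. In the Chain of Thought case the model picks up right after “Let's think step by step.” and writes out its reasoning before the answer.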

Pseudocode example:

```
Python stuff
def myFunction
    count from 1 to 100
    print
```

About SoloScaleAI

The new wave 🌊 of AI tools, exemplified by ChatGPT, is absurdly powerful 💥, and we’ve barely figured out 🤔 what these tools can do. I believe it will soon ⌛ be possible for a single person 🙋‍♀️ to orchestrate a $100M 💰 enterprise. Starting in 2024 the wave will turn into a tsunami 🌊🌊🌊 of solo and small team entrepreneurs outcompeting legacy 🏛 companies. 👆 SoloScaleAI is dedicated to exploring this fantastic 🤯 opportunity; maybe even trying to sneak a peek past the event horizon 💫. Join in!